yasmeeta

-

Supabase Secures $100 Million Funding at $5 Billion Valuation, Embraces Vibe Coding

Theresa Loconsolo, an audio producer at TechCrunch, has been captivated by the rapid ascent of Supabase. This open-source, Postgres-based database platform is quickly establishing itself as the backend of choice for the new vibe-coding community. Based…

-

Nordic Startups Thrive as Founders Embrace Risk and Innovation

Theresa Loconsolo, an audio producer at TechCrunch, recently highlighted the evolution of the Nordic startup ecosystem in a conversation hosted on the network’s flagship podcast, Equity. Loconsolo, of Freehold, NJ, received her Communication degree from…

-

Major Funding Rounds Propel Growth of AI Startups in 2025

In 2025, a massive wave of investment flooded into artificial intelligence (AI) startups. Several of these companies closed funding rounds of more than $100 million. This influx of capital is…

-

Stickerbox Revolutionizes Creativity with AI-Powered Sticker Maker for Kids

Stickerbox, an innovative new sticker play system for kids, has officially hit the shelves. It offers an exciting new vision for the intersection of creativity and technology. This $99.99 gadget, resembling a small, bright red…

-

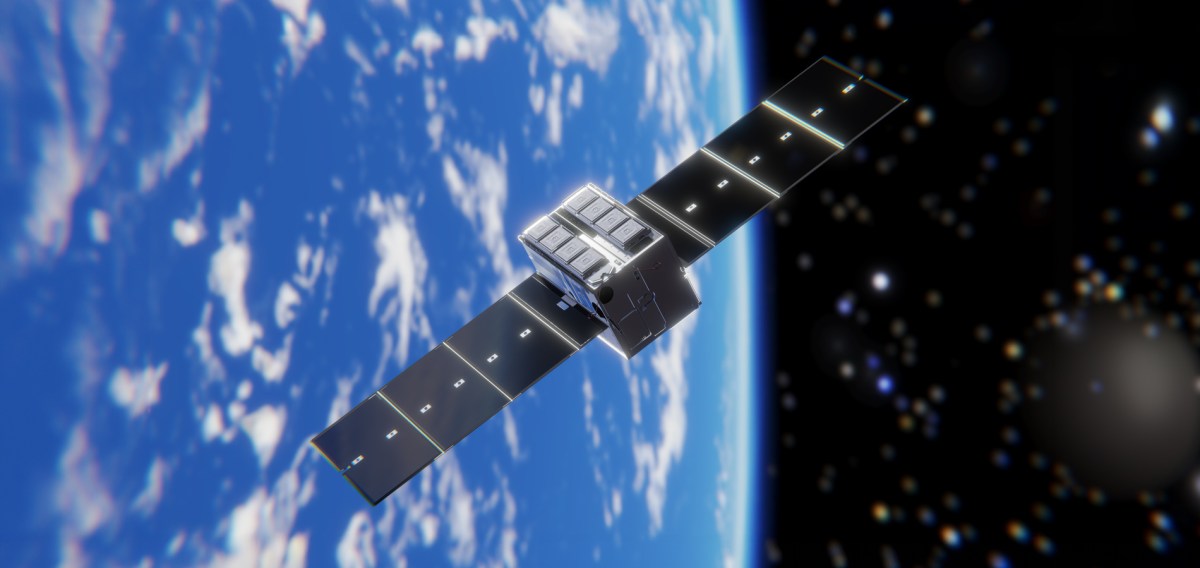

Startup Fleet Space Leverages AI and Satellites to Expand Lithium Deposit in Quebec

Fleet Space, an innovative startup based in Australia, has made significant strides in lithium exploration by employing satellite-powered artificial intelligence (AI) technology. The company got leadership’s support as it greatly increased the…