General

-

Imagen 3 from Google Offers New Editing Features for Creators

Google Cloud has integrated its newest AI models, Veo and Imagen 3, into Vertex AI, its platform for AI application development. The integration makes Google the first major cloud provider to offer a video generation…

-

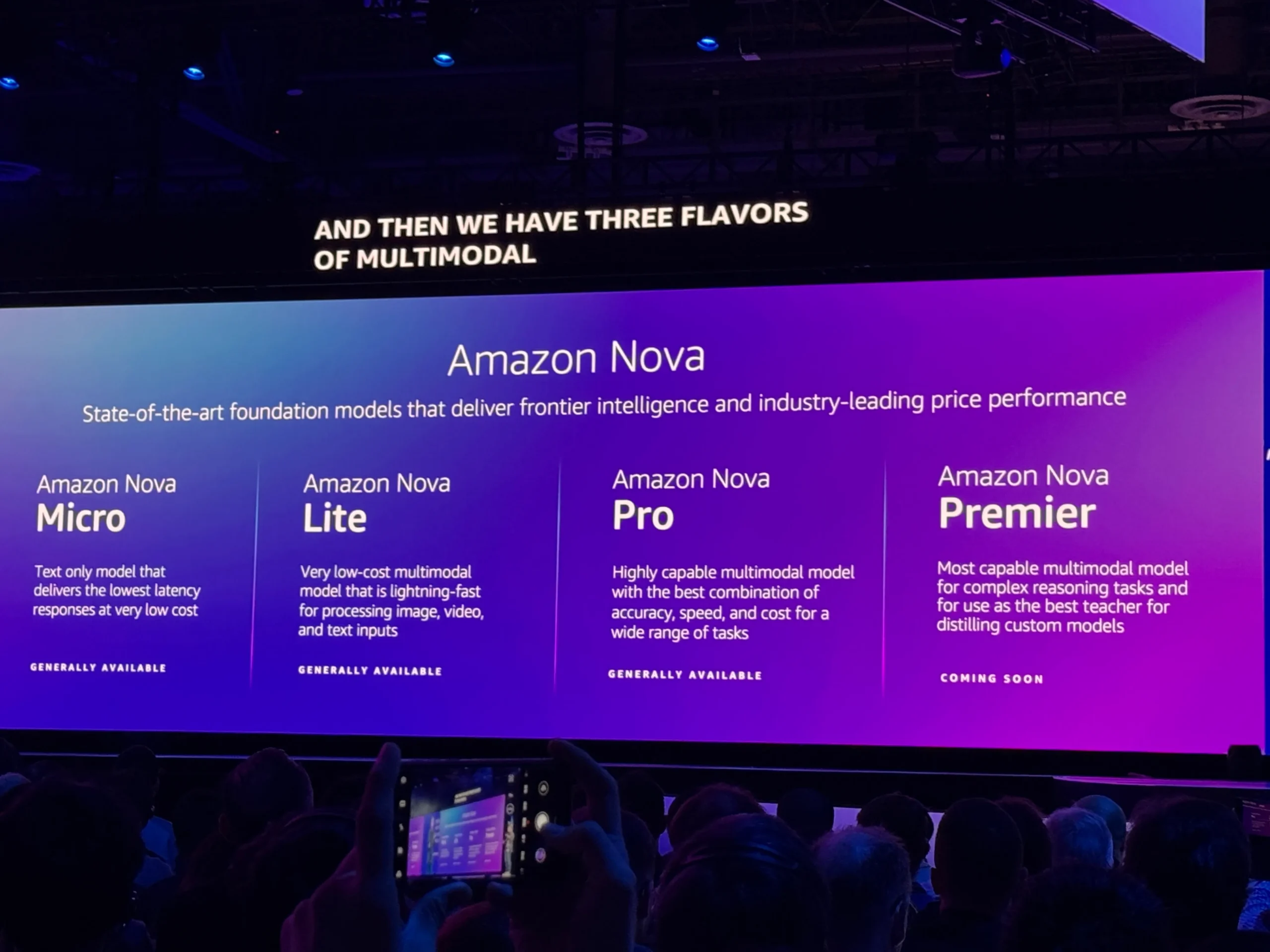

Amazon Launches Nova AI Models for Text, Image, and Video Creation

Amazon has unveiled its Nova AI model family at the annual AWS re:Invent conference, significantly expanding its generative AI capabilities. CEO Andy Jassy announced that Nova empowers users to…

-

Yurts Secures $40 Million to Revolutionize AI for the Department of Defense

Yurts, an AI integration platform focused on high-security businesses, has raised $40 million in Series B funding to enhance its role as a key AI assistant for the Department of Defense (DoD). The funding round,…

-

Startup Lizcore Secures Funding to Boost Indoor Climbing Technology

Lizcore, a Spanish startup focused on enhancing indoor climbing experiences, has secured €600,000 in pre-seed funding as it works to commercialize its innovative tracking and safety technology. The investment comes from a mix of venture…

-

ASL Aspire Brings Game-Based STEM Learning to Deaf Students

Poor literacy skills have long challenged the deaf and hard-of-hearing community, with median literacy rates among deaf high school graduates stuck at a fourth-grade level for over a century, according to the National Center for…