Claude 2, the latest ChatGPT contender, enters open beta

On Tuesday, Anthropic unveiled Claude 2, a large language model (LLM) similar to ChatGPT that can write code, analyze text, and compose writing. Unlike the original Claude, released in March, Claude 2 is freely available to try on a new beta website. It is also offered to developers as a commercial API.

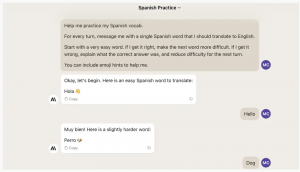

Anthropic says Claude is designed to feel like talking with a helpful colleague or personal assistant. The new version incorporates feedback from users of the previous model. Anthropic highlights that it is easier to converse with, explains its reasoning more clearly, is less likely to produce harmful output, and has a longer memory.

Anthropic also claims that Claude 2 shows notable gains in three key areas: coding, mathematics, and reasoning. The company notes, “Our latest model achieved a 76.5% score on the multiple-choice section of the Bar exam, a marked improvement from Claude 1.3’s 73.0%.” Compared with college students applying to graduate programs, Claude 2 scores in the top 10% on the GRE reading and writing exams and performs on par with the median applicant in quantitative reasoning.

Among Claude 2’s most significant improvements is a larger input and output capacity. As we’ve previously covered, Anthropic has been experimenting with prompts of up to 100,000 tokens, which lets the model analyze long documents such as technical manuals or entire books. The expanded limit also applies to the model’s output, so it can generate longer documents.

Claude 2’s coding ability has also improved noticeably. Its score on Codex HumanEval, a Python programming test, rose from 56 percent to 71.2 percent. On GSM8k, a set of grade-school math problems, its score rose from 85.2 to 88 percent.

A primary focus for Anthropic has been refining its language model to reduce the likelihood of generating “harmful” or “offensive” outputs in response to specific prompts, although quantifying these qualities remains subjective and challenging. An internal red-teaming evaluation revealed that “Claude 2 delivered responses that were twice as benign as Claude 1.3.”

Claude 2 is now available to the general public in the US and UK, both to individual users and to businesses through its API. Anthropic reports that companies such as Jasper, an AI writing platform, and Sourcegraph, a code navigation tool, have already integrated Claude 2 into their products.

It’s important to remember that although AI models like Claude 2 can analyze long and complex content, Anthropic acknowledges their limitations: language models sometimes generate information with no factual basis. It’s therefore best not to treat them as authoritative sources, but to use them to process data you provide, ideally on a subject you already know well enough to verify the results.

Anthropic emphasizes that “AI assistants are most beneficial in everyday scenarios, such as summarizing or organizing information,” and cautions against their use in situations involving physical or mental health and well-being.